Welcome to this edition of Loop!

To kick off your week, we’ve rounded-up the most important technology and AI updates that you should know about.

HIGHLIGHTS

Anthropic releases the hidden prompts that control Claude

How easy it is manipulate AI search engines

Meta’s internal project that’s developing AR glasses

… and much more

Let's jump in!

1. Intuitive Machines win $117 million contract to explore Moon’s south pole

NASA has awarded the company a huge contract and they will send another lander to the planet in 2027.

Intuitive Machines made history in February of this year, as it landed a privately built robot on the Moon for the first time.

This new mission will deliver six payloads, with a focus on exploring the planet’s south pole.

Instruments will be used to better understand the Moon’s environment, particularly the location of water, ice, or gas.

The south pole is a high priority for NASA’s scientists as water ice has been detected there, but it also poses a significant number of challenges for landing.

The terrain is rough, much of it is covered in shadows, and the temperatures are extremely low - which can impact equipment and cause them to stop working.

2. Google reveals an AI that can listen for signs of sickness

The company’s researchers have created Health Acoustic Representations (HeAR), which listens to human sounds - such as coughs or breathing - and identifies the early signs of disease.

Their tool has been trained on 300 million audio samples, including 100 million cough sounds.

But that’s a huge amount of data, which is tricky to get hold of in the medical industry. This is because you need the patient’s permission and then have to anonymise that data.

It’s incredibly expensive to collect this data, which limits the number of companies that can realistically do this.

Interestingly, HeAR can still perform well when there is a lack of training data. That’s great news for researchers who want to customise it and detect other diseases, since they won’t need to collect millions of samples.

Salcit Technologies, an India-based healthcare company, is already looking at how HeAR could be used to detect tuberculosis at an early stage.

Health products are heavily regulated for obvious reasons and take a long time before they come to market, but Google’s work could lead to significantly cheaper testing for diseases.

If you could just use a phone to record someone’s cough, it would allow millions to check their symptoms at home and then decide if they should visit a doctor. Detecting those issues early could save a lot of lives.

3. Brazil has banned X for ignoring court orders

X, formerly known as Twitter, has been banned in Brazil after it failed to meet a court order.

Brazil has taken a unique approach to how social media companies are policed.

In 2022, one judge was given significant powers by the Supreme Court and can order the removal of posts that break the law.

This was implemented ahead of the country’s election and an experiment that was initially praised as a success.

It contrasts with the approach taken by Western nations, which have encouraged social media companies to police themselves.

That view is starting to change in the EU and elsewhere, as new laws have been introduced - such as the Digital Services Act.

4. Apple and Nvidia are planning to invest in OpenAI

The new funding round could value OpenAI at $100 billion, with Apple and Nvidia currently leading the talks.

It’s not surprising to see that Nvidia wants to get involved, as they have invested heavily in other AI companies - many of them focusing on robotics.

But it’s an unusual move by Apple, who traditionally buy very small startups and then integrate them into their services.

However, Apple has been behind the other companies when it comes to AI development.

OpenAI has led a lot on Generative AI technologies - such as text and video - while Google DeepMind is leading on more focused projects, as seen with their healthcare research.

Yes, Apple is now integrating OpenAI’s models into the next version of iOS. But they’ll need a better view of what’s coming down the track.

With the new iPhone launch next week, it’s possible we will learn more at the event.

5. Meta is working on AR glasses, but they’re probably years away

Codenamed Puffin, the new device will look similar to a large pair of glasses - positioning them between the Ray-Ban smart glasses and the heavier Quest 3 headset.

Although, it’s worth stressing that this is still an active research project and it could be changed as time goes on.

There’s a lot of terminology between Augmented Reality (basically glasses that overlay graphics on top of the real-world) and Virtual Reality (a big headset that closes you off from the real-world).

Meta has invested tens of billions into AR/VR devices, which they brand as the “metaverse”. The scale of investment and lack of pay-off has provoked scepticism from investors.

These devices do have a lot of promise to change how we interact with technology, but there are too many challenges right now.

We can’t make them small enough to look like traditional glasses. The batteries are too bulky and heavy. And expensive screens are needed to show the content.

It’s unlikely we’ll see progress in this area for a while, but Meta has pushed parts of the AI sector forward with their research. I’m hopeful they can do the same with AR/VR, but we should be clear-eyed about the challenges that remain.

How to manipulate AI search engines

In the last few years, we have seen companies unveil their own “AI search” features. We can talk to chatbots and ask them questions about people or documents that we uploaded.

Perplexity is the most famous startup in this sector, which I covered in more detail here, and Google has also released their own tool.

These chatbots use the data that they have been given to return those answers. So, if they have been given information that shows you in a negative light - then they will reinforce that in their answers.

Kevin Roose, a journalist from The New York Times, recently found that the chatbots reacted quite negatively when they were asked about him.

This follows Roose’s investigation into BingChat, which grabbed headlines as it encouraged him to leave his wife. Soon afterwards, Microsoft heavily restricted the chatbot to stop this behaviour.

Since there were so many stories about Roose and the chatbot, this data was fed into the latest LLMs we have today - which explains why they reacted negatively to his name.

That raises the possibility that people can “game” the responses for future models. If chatbots blindly accept what is written down, we could encourage them to recommend one product over another.

Or we could make up dozens of fake stories and damage a person’s reputation. Tech companies can combat this by only using “trusted” sources for this information, but they’re in a fierce race to collect more and more data.

As a result, it’s inevitable that data created by malicious actors will be used to train these models. That’s a worrying thought and I don’t see how this will get better anytime soon.

Anthropic publishes the system prompts that control Claude

If you’re working on a project that uses Generative AI models, or just want to learn more about how they’re setup by the top companies, you should check out Anthropic’s latest resources.

The startup has published the system prompts for many of their Claude models, which provide it with guidance on how it should respond to users - alongside topics it should avoid entirely.

It’s a good way to understand how these models are prompted and use it in your own work.

Of course, OpenAI and Anthropic will have a lot of other systems in the background - which aren’t visible to the public.

These are used to categorise your message and detect malicious behaviour. Companies like Microsoft and AWS have started to make these available to enterprise customers, so that they can do the same.

You can also find hidden prompts for other models here, such as those built by OpenAI and Meta.

🤖 Amazon hires founders from an AI robotics startup, known as Covariant

🎮 Startup uses generative AI to power NPCs in new video game

🚗 Uber invests in South Korea, as it tries to take on Kakao Mobility

💻 Magic raises $320 million for their GenAI coding tools

⚖️ France charges Telegram’s founder for enabling organised crime

📱 Apple will soon let you use AI to remove objects from pictures

🚙 Polestar's CEO has been removed, following a decline in EV sales

🕵️ Chinese government hackers have targeted US internet providers

🗣️ Amazon's Alexa voice assistant will start to use Claude

🍽️ Samsung will add AI features to its meal planning app

Agency

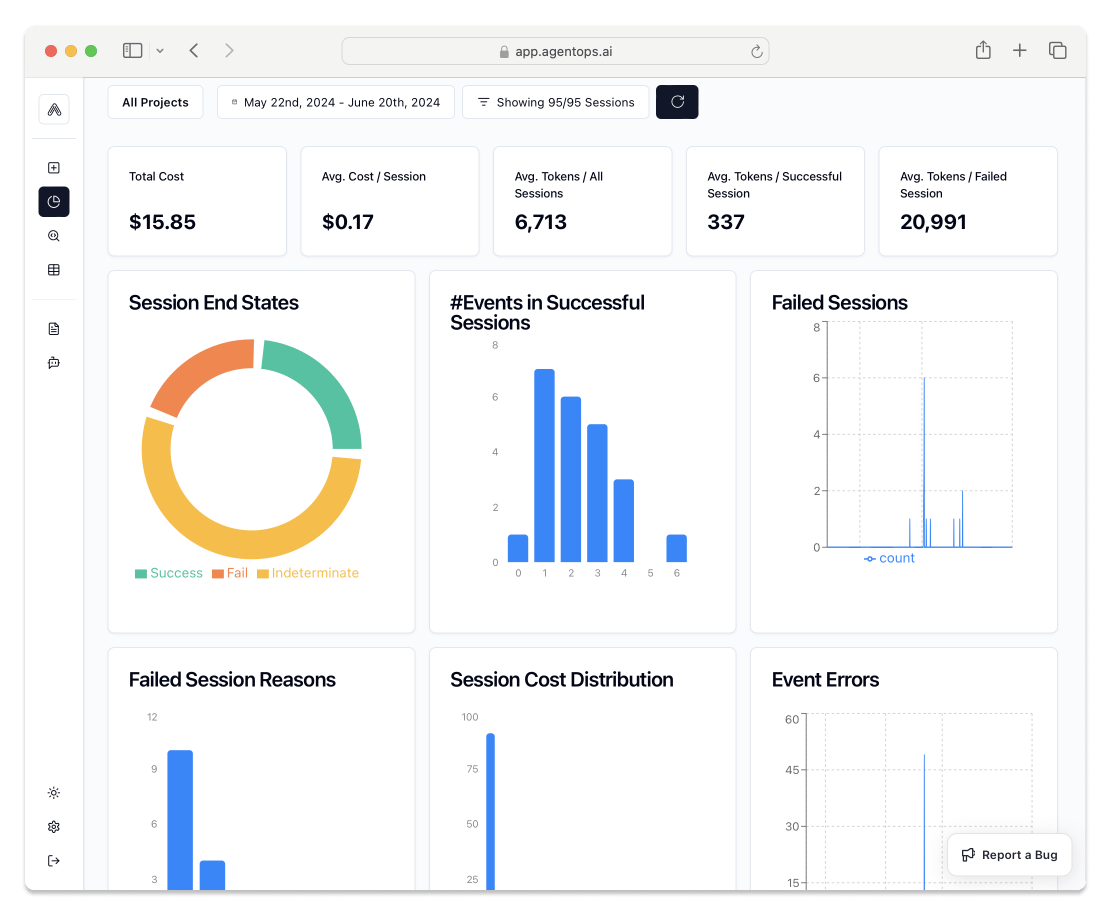

AI agents are becoming more prevalent and companies are starting to explore the different ways they could be used.

This startup has created debugging tools for agents, which allow you to spot issues and quickly fix them.

The company is based in San Francisco and has raised $2.6 million to further build the AgentOps product.

Debugging is a really important area for agents, since they have more control over the process and it’s easier for them to go off-track.

Developers need that visibility to understand why they made a decision and can then change the prompt to prevent it happening again.

Agency’s platform can integrate with most of the popular agent frameworks, such as Microsoft’s AutoGen, CrewAI, or AutoGPT.

Given that AI agents are a new technology and can be difficult for some companies to navigate, they also help them to build their own.

For those just getting started, I’d suggest using AutoGen as it’s very beginner-friendly. For more complex workflows, you can try LangGraph Agents.

This Week’s Art

Loop via Midjourney V6.1

The standout story from last week has to be Google’s HeAR project, which uses audio of you coughing to identify the disease you might have.

There’s no doubt of the potential here, especially when you consider that very little data is needed to use it for detecting other illnesses. I’m really looking forward to see how that technology progresses.

We’ve covered a lot this week, including:

Intuitive Machine’s upcoming Moon mission

Google’s research into AI models that can listen for illnesses

Why X has been banned in Brazil

Apple and Nvidia’s talks to invest in OpenAI

Meta’s internal work on AR glasses

How to manipulate the responses from AI search engines

Anthropic’s release of the system prompts that restrict Claude

And how Agency are building debugging tools for your agent workflows

Have a good week!

Liam

Share with Others

If you found something interesting in this week’s edition, feel free to share this newsletter with your colleagues.

About the Author

Liam McCormick is a Senior AI Engineer and works within Kainos' Innovation team. He identifies business value in emerging technologies, implements them, and then shares these insights with others.